NPS is a popular measurement, but does it work for learning?

TECHNOLOGY – By Steven B. Just, Ed.D.

These days we’re increasingly seeing Net Promoter Scores (NPS) used as a measure of learner satisfaction in Level One surveys. Borrowed from the field of market research, NPS is popular because it’s a single number that management can grasp quickly.

Unfortunately, using it within a learning context is wrong! Why? This article will detail several reasons.

What Is a Net Promoter Score?

For those not familiar with NPS, it was first introduced around 18 years ago as a measure of customer satisfaction for products and services. Since its introduction, it has exploded in popularity. The basic premise is that a company asks its customers one simple question:

How likely are you to recommend our products or services on a scale of 0 to 10?

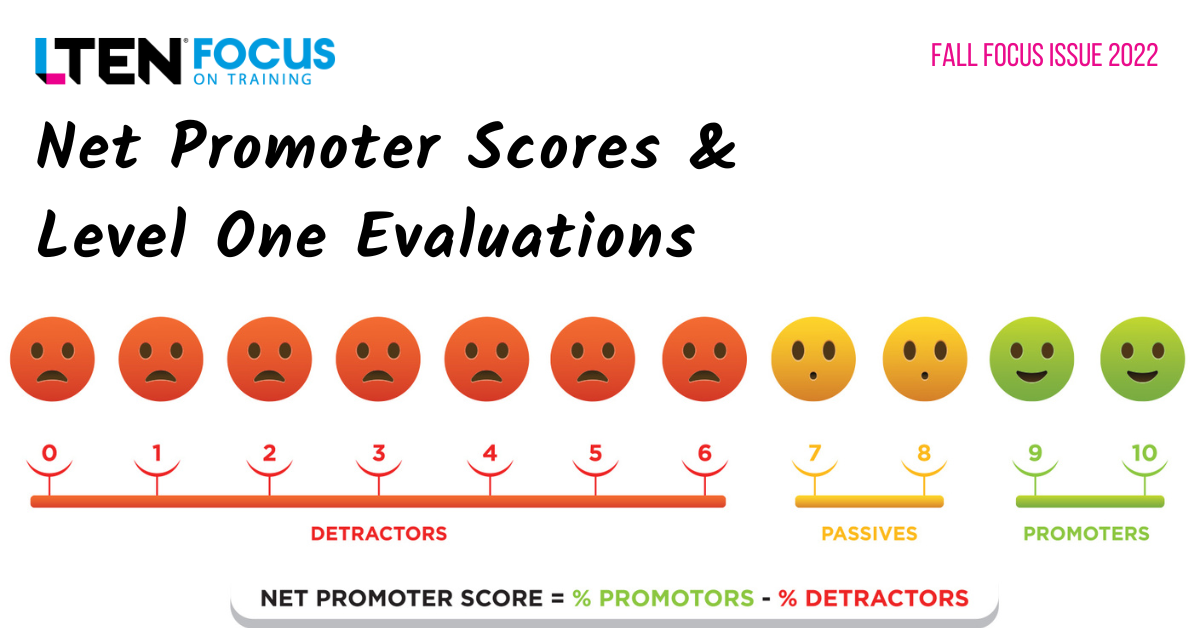

The respondents are categorized as:

- Detractors 0-6

- Passives 7-8

- Promoters 9-10

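The percentage of detractors is subtracted from the percentage of promoters, yielding the NPS, which can therefore range from -100 to +100. Passives count toward the total number of respondents but toward neither percentage. (See Figure 1.)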

While any score above zero is technically good, generally scores above 50 are considered excellent.
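For readers who want to see the arithmetic, the calculation described above can be sketched in a few lines of Python. This is only an illustration: the function name `net_promoter_score` and the sample ratings are made up for this example and are not taken from any survey tool or real survey.

```python
def net_promoter_score(ratings):
    """Return the NPS for a list of 0-10 survey ratings.

    Hypothetical helper for illustration; not part of any survey product.
    """
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(r < 0 or r > 10 for r in ratings):
        raise ValueError("ratings must be between 0 and 10")

    promoters = sum(1 for r in ratings if r >= 9)   # scores of 9-10
    detractors = sum(1 for r in ratings if r <= 6)  # scores of 0-6
    # Passives (7-8) count only toward the total number of respondents.
    return 100 * (promoters - detractors) / len(ratings)


# Made-up ratings from ten respondents: 4 promoters, 3 passives, 3 detractors.
sample = [10, 9, 9, 8, 7, 7, 6, 5, 10, 3]
print(net_promoter_score(sample))  # 40% promoters - 30% detractors = 10.0
```

Note how much information the single number discards: a group of all 7s and 8s and a group split evenly between 0s and 10s both produce an NPS of zero.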

Use of NPS in Learning

It’s not hard to understand why the learning and development function has co-opted this measure for Level One surveys. Our learners gave us an NPS of 80! Our courses are great!

So, why is this wrong? To understand what is wrong we need to dive just a little bit into learning measurement theory.

For any learning measure to be meaningful, it must be valid. What does this mean? There are several types of validity, but the two that concern us here are:

- Construct Validity: Are you measuring what you think you are measuring? Are you using the correct tool? Is the metric you produced meaningful for the purpose at hand? For example, let’s say someone gave you a box and asked you to weigh it, so you took out a yardstick and reported that it was 16 inches. Now, that yardstick is a valid measurement instrument, but it’s not providing meaningful data for the purpose for which it is being used.

- Predictive Validity: Is your measurement predictive of some desired behavior? For example, are high college grades in challenging math courses predictive of success in a graduate science program? Perhaps not 100%, but there’s probably a strong correlation.

Now let’s consider NPS as a measure of learner satisfaction and see if it has either construct or predictive validity.

NPS and Construct Validity

Consumers are usually pretty good at determining if they like the quality of a product or service. But are learners good judges of learning experiences? Decades of research provide a clear answer to this question: No.

Learners “like” a learning experience for a variety of reasons (they like the instructor, they like the learning environment, they enjoy the slides, the graphics, etc.). But decades of research have shown that they are very bad judges of what is effective learning and how much they have learned. (This field of study is called judgments of learning.)

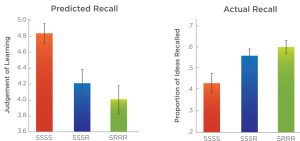

For example, Figure 2 shows the results of one study (Karpicke, Butler and Roediger, 2009) that compared three groups of learners using three different learning strategies. On the left is how much the learners in each group thought they had learned; on the right are their actual test results, which are exactly the opposite of what they thought.

NPS and Predictive Validity

What do high NPS scores predict? Learner satisfaction is not bad in and of itself, but does it predict important learner behaviors? Does it correlate with learning or learning transfer? Again, dozens of research studies have demonstrated that the answer is no.

For example, in one study (Gessler, 2009), the author looked for correlations among learner satisfaction (Level One), learning success (Level Two) and transfer performance (Level Three). (While he didn’t explicitly look at NPS, NPS measures learner satisfaction.) Here is a summary of the results:

- There was “no significant correlation between satisfaction and learning success.”

- There was “no significant correlation between satisfaction and transfer performance.”
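Readers who want to check this relationship in their own programs could compute a correlation coefficient between satisfaction ratings and test scores for the same learners. The sketch below assumes a plain Pearson correlation; it is not Gessler’s method, the function name `pearson_r` is hypothetical, and it does not test for statistical significance.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length lists of numbers.

    Hypothetical helper for illustration only.
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length with at least 2 values")

    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)

    # Sum of cross-products and sums of squared deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)

    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return cov / sqrt(var_x * var_y)
```

Calling `pearson_r(satisfaction_ratings, test_scores)` with one pair of values per learner returns a number between -1 and +1. A value near zero indicates that the satisfaction ratings tell you little about the test scores.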

Conclusion

NPS may work as a measure of consumer satisfaction, but in learning it has neither construct nor predictive validity. For these reasons it has no place in learning evaluation.

There are non-NPS survey methods for measuring learner satisfaction, which are fine, as long as you understand that satisfaction does not correlate with learning or learning transfer.

Of course, if anyone has a different take on this, I would love to hear from you.

Steven B. Just, Ed.D., is CEO of Princeton Metrics. He can be reached at sjust@princetonmetrics.com.